Hardworking Students plus State-of-the-art Equipment equals Success

Kongsberg has placed over a million euros' worth of equipment at the disposal of Turku University of Applied Sciences [1]. In the ARPA project, this equipment is used to build a sensor platform that supports the development of autonomous and remotely operated maritime traffic. The equipment includes a wide range of cameras, sensors and detectors.

In fall 2021, a group of fourth-year students from the embedded software & IoT study path took part in the ARPA project through a 15 ECTS research and development course (System Design in Figure 1). The research topics of ARPA are a perfect fit for students at this late phase of their studies. In autonomous systems, computer science meets the physical world, which leads to fascinating cross-disciplinary topics such as sensor fusion, machine learning (ML) and artificial intelligence (AI).

Figure 1. The study plan of embedded systems students. The research and development course takes place during the autumn of the fourth study year.

During the R&D Project course, the students focused on two main tracks related to the ARPA sensor platform design: environmental sensing with a state-of-the-art weather station and object detection with LiDAR (light detection and ranging) devices.

Experimenting with the weather station

Figure 2. A state-of-the-art weather station and LiDAR installed on the roof of the EDUCity building in Turku, Finland.

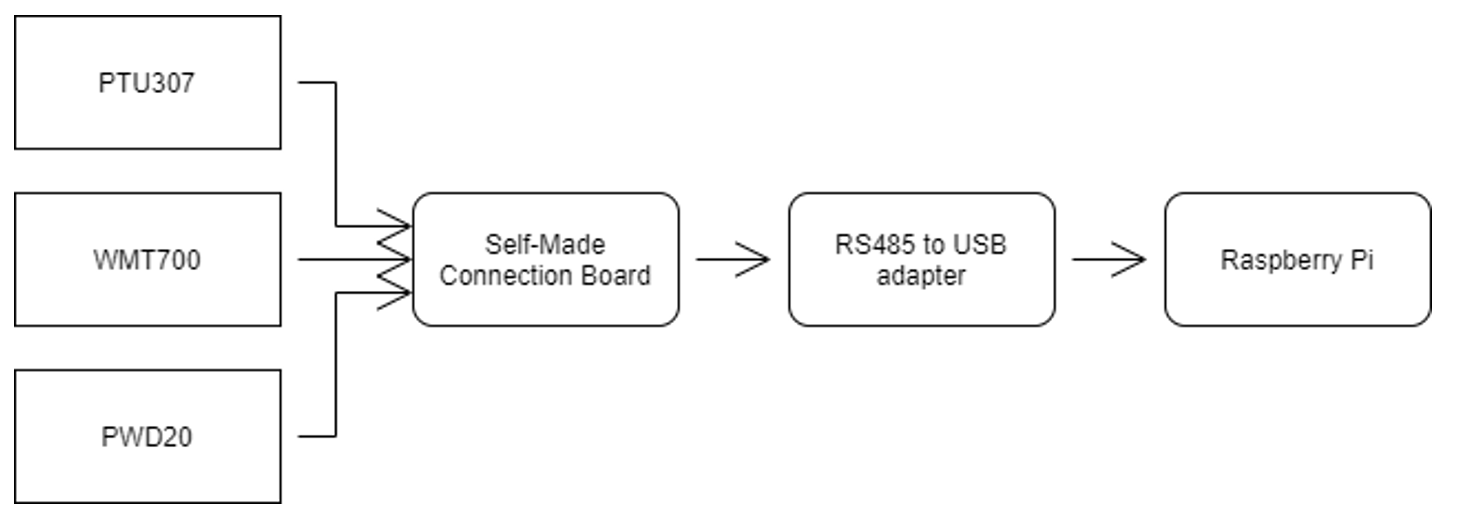

The student group implemented the hardware and software components needed to store the sensor data in a database and visualize it. The weather station's sensors measure temperature, humidity, visibility, air pressure, wind speed and wind direction, all of which are read over an RS-485 serial interface by a small embedded computer, a Raspberry Pi. The block diagram of the weather station is shown in Figure 3.

Figure 3. Block diagram of the weather station.

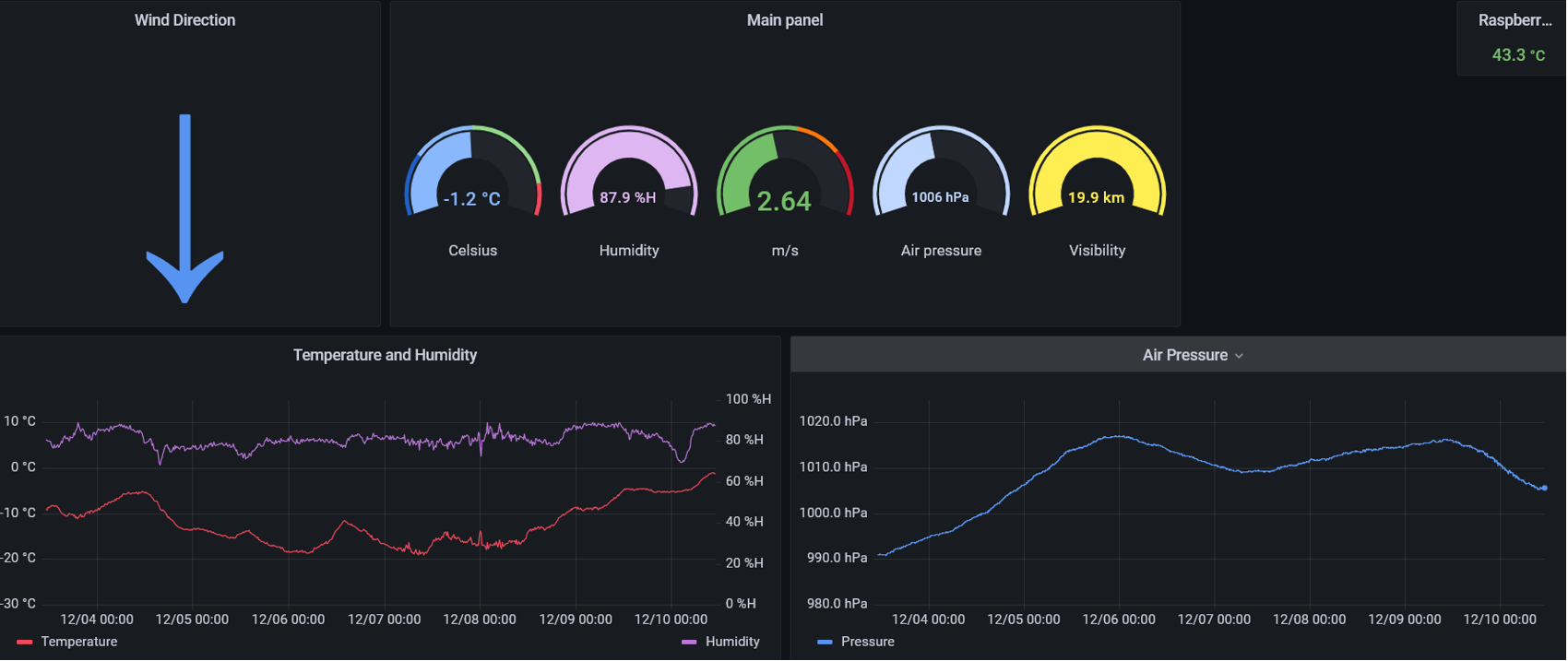
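The post does not say which protocol the weather station uses on its RS-485 bus. Many industrial weather sensors speak Modbus RTU, so the following is a minimal sketch that assumes that protocol: it builds a "read holding registers" (function 0x03) request with the CRC-16 checksum Modbus RTU requires, and validates the reply. The slave address and register numbers are hypothetical; the real register map would come from the station's documentation, and the bytes would be written to and read from the serial port by a separate I/O layer.

```python
import struct


def crc16_modbus(data: bytes) -> int:
    """CRC-16/MODBUS: reflected polynomial 0xA001, initial value 0xFFFF, no final XOR."""
    crc = 0xFFFF
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x0001:
                crc = (crc >> 1) ^ 0xA001
            else:
                crc >>= 1
    return crc


def build_read_request(slave: int, start_register: int, count: int) -> bytes:
    """Build a Modbus RTU 'read holding registers' (0x03) request frame."""
    # Slave address (1 byte), function code (1 byte),
    # start register and register count (big-endian 16-bit each).
    pdu = struct.pack(">BBHH", slave, 0x03, start_register, count)
    # Modbus RTU appends the CRC low byte first.
    return pdu + struct.pack("<H", crc16_modbus(pdu))


def parse_read_response(frame: bytes) -> list[int]:
    """Validate a function-0x03 response frame and return its 16-bit register values."""
    # Running the CRC over a frame that ends in its own valid CRC yields 0.
    if len(frame) < 5 or crc16_modbus(frame) != 0:
        raise ValueError("short frame or CRC mismatch")
    function, byte_count = frame[1], frame[2]
    if function != 0x03:
        raise ValueError(f"unexpected function code {function:#04x}")
    data = frame[3:3 + byte_count]
    if len(data) != byte_count or byte_count % 2:
        raise ValueError("malformed register payload")
    return list(struct.unpack(f">{byte_count // 2}H", data))
```

Sensors of this kind often report scaled integers (for example, temperature in tenths of a degree), so the conversion factors would also be taken from the device manual.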

The acquired data is then written to a database and visualized with the Grafana data visualization platform (Figure 4). Combined with LiDAR data, the weather data could show how well LiDAR object recognition performs under varying weather conditions: sensor fusion indeed!

Figure 4. Collected weather data shown in the Grafana data visualization platform.
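The post does not name the database behind the Grafana dashboards. As a minimal sketch, assuming a plain SQL table (SQLite from Python's standard library here, with a hypothetical table `weather` and column names of our own choosing), storing readings and pairing a LiDAR detection with the most recent weather reading could look like this. That time alignment is the step that makes a "LiDAR performance versus weather" comparison possible.

```python
import sqlite3


def open_db(path: str = ":memory:") -> sqlite3.Connection:
    """Open the database and create the (hypothetical) weather table if needed."""
    conn = sqlite3.connect(path)
    # ts is a Unix timestamp in seconds; units are encoded in the column names.
    conn.execute(
        """CREATE TABLE IF NOT EXISTS weather (
               ts REAL PRIMARY KEY,
               temperature_c REAL,
               humidity_pct REAL,
               visibility_m REAL,
               pressure_hpa REAL,
               wind_speed_ms REAL,
               wind_dir_deg REAL)"""
    )
    return conn


def store_reading(conn, ts, temperature_c, humidity_pct, visibility_m,
                  pressure_hpa, wind_speed_ms, wind_dir_deg):
    """Insert one weather station reading; the with-block commits on success."""
    with conn:
        conn.execute(
            "INSERT INTO weather VALUES (?, ?, ?, ?, ?, ?, ?)",
            (ts, temperature_c, humidity_pct, visibility_m,
             pressure_hpa, wind_speed_ms, wind_dir_deg),
        )


def weather_at(conn, ts, max_age_s=60.0):
    """Return (ts, visibility_m, humidity_pct) of the latest reading at or before ts,
    or None if no reading is at most max_age_s seconds old."""
    return conn.execute(
        "SELECT ts, visibility_m, humidity_pct FROM weather "
        "WHERE ts <= ? AND ts >= ? ORDER BY ts DESC LIMIT 1",
        (ts, ts - max_age_s),
    ).fetchone()
```

Each LiDAR detection timestamp can then be passed to `weather_at` so that recognition results can be grouped by, for example, visibility or humidity.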

LiDAR and object recognition

An earlier ARPA blog post, "Collecting Sensor Fusion Data for Autonomous Systems Research" [4], outlined the challenges with LiDAR devices and object recognition: machine learning models must be trained before they can be applied, and training requires large annotated (labelled) data sets.
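To make "annotated data" concrete: one widely used convention for 3D object labels is the KITTI label format, in which each text line describes one object with its class, 2D image box, 3D size, 3D position and heading. The post does not say which format the project used, so the parser below is only a sketch assuming KITTI-style labels; the class name `Label3D` is hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Label3D:
    cls: str                                # object class, e.g. "Car"
    truncated: float                        # 0.0 (inside image) .. 1.0 (leaving image bounds)
    occluded: int                           # 0 visible, 1 partly, 2 largely occluded, 3 unknown
    alpha: float                            # observation angle (radians)
    bbox: Tuple[float, ...]                 # 2D box: left, top, right, bottom (pixels)
    dimensions: Tuple[float, ...]           # height, width, length (metres)
    location: Tuple[float, ...]             # x, y, z in camera coordinates (metres)
    rotation_y: float                       # heading around the camera Y axis (radians)
    score: Optional[float] = None           # present only in detector output


def parse_kitti_line(line: str) -> Label3D:
    """Parse one KITTI-style label line (15 fields, or 16 with a confidence score)."""
    f = line.split()
    if len(f) not in (15, 16):
        raise ValueError(f"expected 15 or 16 fields, got {len(f)}")
    v = [float(x) for x in f[1:]]
    return Label3D(
        cls=f[0],
        truncated=v[0],
        occluded=int(v[1]),
        alpha=v[2],
        bbox=tuple(v[3:7]),
        dimensions=tuple(v[7:10]),
        location=tuple(v[10:13]),
        rotation_y=v[13],
        score=v[14] if len(v) == 15 else None,
    )
```

Ground-truth files produced by annotators omit the score field, while a detector's output includes it, which is why the same parser handles both.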

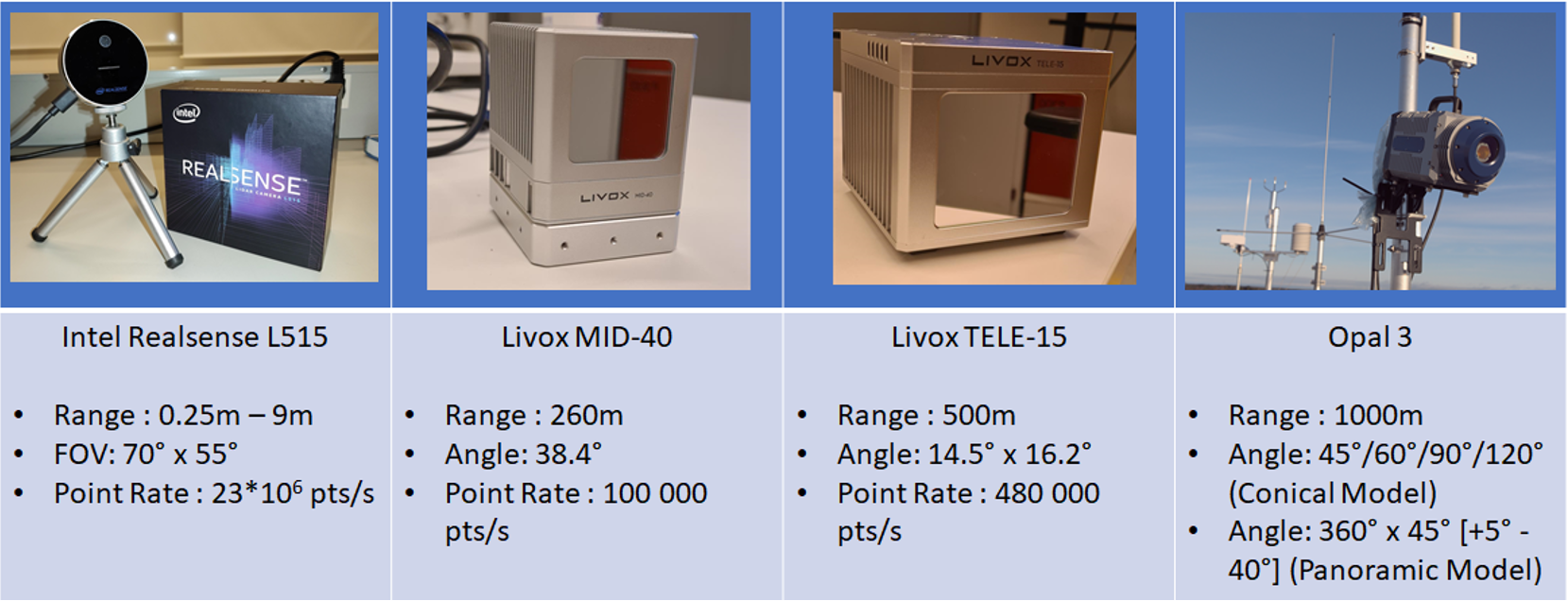

In the student project, several LiDARs from different manufacturers were used to collect the data (Figure 5).

Figure 5. Various LiDARs used in the project.

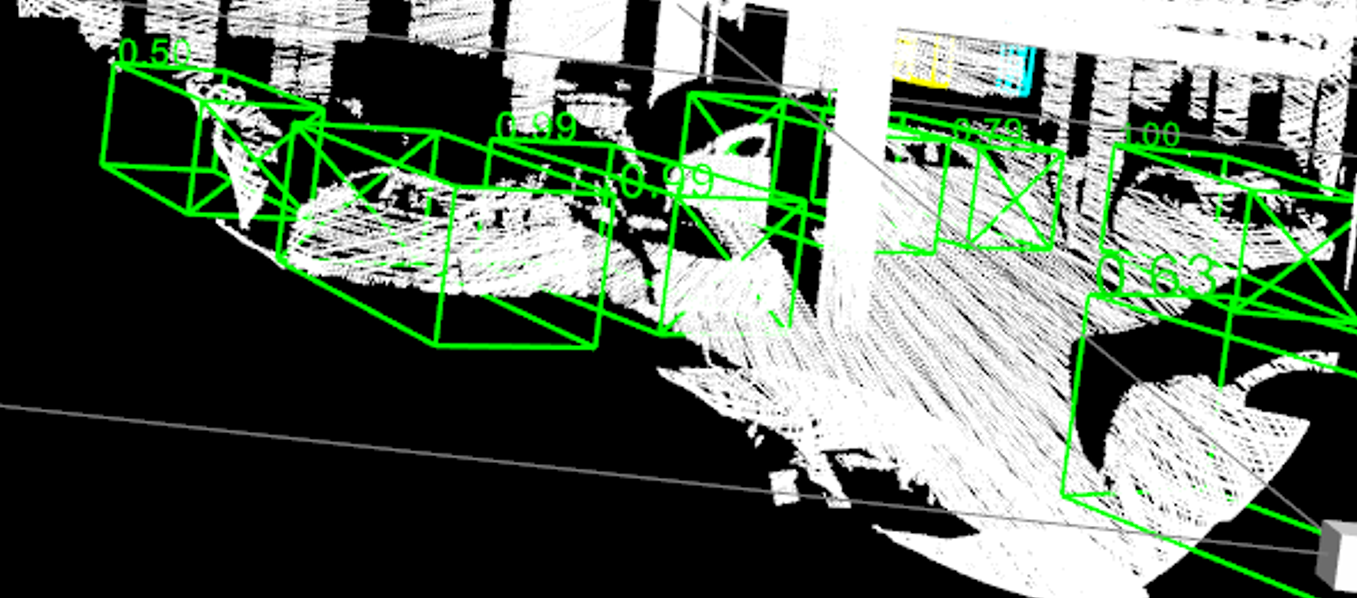

A pre-trained neural network was used to detect cars in the parking hall under ICT-City. The number next to each green bounding box shows the confidence level of the recognized object (Figure 6).

Figure 6. Point cloud captured in the ICT-City parking hall, with recognized objects overlaid.
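Confidence values like those in Figure 6 are commonly combined with a score threshold and non-maximum suppression (NMS) so that each car is reported only once. The post does not describe how the pre-trained network's output was post-processed; the following is a generic sketch assuming axis-aligned bird's-eye-view boxes `(x_min, y_min, x_max, y_max)` in metres, with threshold values chosen only for illustration.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # x_min, y_min, x_max, y_max (metres, top-down view)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: List[Tuple[Box, float]],
                      min_score: float = 0.5,
                      iou_thresh: float = 0.5) -> List[Tuple[Box, float]]:
    """Drop low-confidence boxes, then apply greedy NMS: visit boxes from highest
    to lowest score and keep a box only if it does not overlap any kept box by
    more than iou_thresh."""
    candidates = sorted((d for d in dets if d[1] >= min_score),
                        key=lambda d: d[1], reverse=True)
    kept: List[Tuple[Box, float]] = []
    for box, score in candidates:
        if all(iou(box, k) <= iou_thresh for k, _ in kept):
            kept.append((box, score))
    return kept
```

Raising `min_score` trades missed cars for fewer false alarms; `iou_thresh` controls how close two parked cars may be before one of them is suppressed.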

Finally, LiDAR data was fused with live video to recognize and classify objects in the video image and to measure the distance to them (Figure 7).

Figure 7. Real-time video augmented with object recognition/classification and distance measurement.
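Measuring the distance to an object recognized in the video requires knowing which LiDAR points fall inside its image bounding box. The post does not detail the method; a common approach, assumed here, is to transform the points into the camera frame with an extrinsic calibration (rotation `R`, translation `t`), project them with a pinhole camera model (focal lengths `fx`, `fy`, principal point `cx`, `cy`), and take the median range of the points that land inside the box. All names and calibration values are hypothetical.

```python
import math
from statistics import median


def to_camera(p, R, t):
    """Rigid transform of a LiDAR point p = (x, y, z) into the camera frame."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))


def project(pc, fx, fy, cx, cy):
    """Pinhole projection of a camera-frame point; the camera looks along +Z."""
    x, y, z = pc
    return fx * x / z + cx, fy * y / z + cy


def distance_to_box(points, box, R, t, fx, fy, cx, cy, min_depth=0.1):
    """Median range (metres) of LiDAR points whose projection falls inside
    box = (u_min, v_min, u_max, v_max); None if no point falls inside."""
    ranges = []
    for p in points:
        pc = to_camera(p, R, t)
        if pc[2] <= min_depth:  # behind the camera or too close to project
            continue
        u, v = project(pc, fx, fy, cx, cy)
        if box[0] <= u <= box[2] and box[1] <= v <= box[3]:
            ranges.append(math.sqrt(pc[0] ** 2 + pc[1] ** 2 + pc[2] ** 2))
    return median(ranges) if ranges else None
```

The median is used rather than the mean because a bounding box usually also contains a few background points behind the object, and the median is less sensitive to them. With a LiDAR frame of x forward, y left, z up and a camera frame of x right, y down, z forward, `R` would be `[[0, -1, 0], [0, 0, -1], [1, 0, 0]]` plus whatever small corrections the calibration finds.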

The integration of the ARPA R&D team and teaching activities continues within an Innovation Project (Capstone) [2] with third-year students, in which students from different study paths and disciplines work together. Currently, two student groups are involved. The first group is working on object recognition with LiDARs, covering data collection, AI algorithm trials and data annotation/labelling (Figure 8). The second group focuses on sensor fusion, especially on fusing 2D images with 3D LiDAR point clouds.

Figure 8. Students of a Capstone project working on LiDAR object recognition.

During these student projects, the complexity of a full-blown autonomous vehicle has become crystal clear. It requires a wide range of skills from different areas of ICT, and a lot of work and sweat. Still, the moment when the bounding box matches the (obvious) object in the blurry image is priceless!

Jarno Tuominen, Senior Lecturer, Turku University of Applied Sciences

Juha Kalliovaara, Senior Researcher, Turku University of Applied Sciences

References

[1] Cooperation between Turku UAS and Kongsberg accelerates development work on autonomous vehicles: https://www.tuas.fi/en/news/467/cooperation-between-turku-uas-and-kongsberg-accelerates-development-work-autonomous-vehicles/

[2] https://innovaatioprojektit.turkuamk.fi/en/capstone/capstone-new-projects-start/

[3] Turku UAS receives research equipment worth a million euros on loan from Kongsberg for developing autonomous ships (in Finnish): https://yle.fi/uutiset/3-12091910