Collecting Sensor Fusion Data for Autonomous Systems Research

Introduction

Digitalization and increasing levels of autonomy in transport are expected to advance rapidly in the coming years. This development can help create more sustainable, safer, more efficient, and more reliable service chains for a better quality of life and global prosperity.

As the most efficient long-distance transport method, sea transport carries about 90 % of world trade [1]. With maritime traffic constantly growing, safety and security are becoming key issues, and autonomous operation of maritime vessels is globally recognized as a potential solution.

Enabling autonomous operation and situational awareness requires methods for determining the ship state, navigation, and collision avoidance [2], as well as wireless communication systems, sensors, and sensor fusion. The technologies needed for sufficient situational awareness in autonomous operations already exist, for example artificial intelligence (AI) and deep learning in addition to sensor fusion. The challenge lies in how to utilize and combine these existing technologies.

The Applied Research Platform for Autonomous Systems (ARPA) project aims to produce open data for research on sensor fusion algorithms that improve situational awareness. In multimodal sensor fusion, information from several types of sensors is combined to compensate for the weaknesses of individual sensor types and ultimately obtain more robust situational awareness. Below is a brief description of sensor fusion as an approach, followed by the different types of sensors from which data will be collected in the ARPA project.

Multimodal sensor fusion

Autonomous vessels need accurate situational awareness to support their decision-making. The challenge is the lack of information about the vessel's surroundings, so data about the surroundings must be collected from different types of sensors.

Object detection using computer vision in the maritime environment is a very challenging task due to varying light, viewing distances, weather conditions, and sea waves, to name but a few. False detections may also occur due to light reflections, camera motion, and illumination changes. No single sensor can guarantee sufficient reliability or accuracy in all situations.

Sensor fusion can address this challenge by combining data from different sensors to provide complementary information about the surrounding environment. In sensor fusion, data is combined from multiple high-bandwidth perceptual sensors such as visible light and thermal camera arrays, radars, and Lidars (light detection and ranging). Features can be extracted from the obtained data and classified, for example with deep neural networks. The resulting semantic information can then be combined with spatial information from positioning sensors and systems such as an inertial measurement unit (IMU), Global Navigation Satellite Systems (GNSS), and the Automatic Identification System (AIS), as well as with map data.
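As one illustration of combining semantic detections with positional data, the sketch below pairs classified objects from a perception sensor with AIS position reports using greedy gated nearest-neighbour association. This is a generic textbook technique chosen for illustration, not a method described for the ARPA project. It assumes that both detections and AIS positions have already been converted into the same local east/north frame in metres; the `Detection` and `AisReport` types, the 50 m gate, and the MMSI numbers are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Detection:
    """Hypothetical classified object from a camera, radar or Lidar pipeline."""
    label: str
    east: float   # metres in a shared local frame
    north: float


@dataclass
class AisReport:
    """Hypothetical simplified AIS position report in the same local frame."""
    mmsi: int
    east: float
    north: float


def associate(detections, reports, gate_m=50.0):
    """Greedy gated nearest-neighbour association.

    Returns {detection index: MMSI or None}. Candidate pairs farther apart than
    gate_m are never matched, and each AIS report is used at most once.
    """
    candidates = []
    for i, det in enumerate(detections):
        for j, rep in enumerate(reports):
            dist = math.hypot(det.east - rep.east, det.north - rep.north)
            if dist <= gate_m:
                candidates.append((dist, i, j))
    candidates.sort()  # closest pairs are assigned first

    matches = {i: None for i in range(len(detections))}
    used = set()
    for _, i, j in candidates:
        if matches[i] is None and j not in used:
            matches[i] = reports[j].mmsi
            used.add(j)
    return matches


detections = [Detection("ship", 100.0, 200.0), Detection("small boat", 500.0, 500.0)]
reports = [AisReport(230000001, 110.0, 195.0), AisReport(230000002, 900.0, 900.0)]
print(associate(detections, reports))  # {0: 230000001, 1: None}
```

A detection left without a match (`None`) is a natural candidate for closer inspection, since not every vessel transmits AIS.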

Implementing sensor fusion in real time requires a significant amount of computing power, so sensor fusion can also benefit from the processing power available in cloud environments instead of processing all the data on board. This, of course, presumes sufficient wireless connectivity to transfer the sensor data. The amount of transmitted data can be reduced by pre-processing on board.
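A back-of-the-envelope calculation shows why on-board pre-processing matters. The sketch compares the bit rate of uncompressed video from a camera array with that of a list of detected objects. The resolution, frame rate, number of cameras, object count, and 32-byte object record are illustrative assumptions, not ARPA sensor specifications, and a real system would typically also compress the video.

```python
def raw_video_bitrate(width, height, bytes_per_pixel, fps, cameras=1):
    """Bit rate (bit/s) of uncompressed video from `cameras` identical cameras."""
    return width * height * bytes_per_pixel * 8 * fps * cameras


def detection_list_bitrate(objects_per_frame, bytes_per_object, fps):
    """Bit rate (bit/s) if only a list of detected objects is sent per frame."""
    return objects_per_frame * bytes_per_object * 8 * fps


# Assumed example: five 1920x1080 RGB cameras (3 bytes/pixel) at 30 frames/s.
raw = raw_video_bitrate(1920, 1080, 3, 30, cameras=5)
# Assumed example: 50 objects per frame, 32 bytes each (class, position, size, ...).
objects = detection_list_bitrate(50, 32, 30)

print(f"raw video:      {raw / 1e9:.2f} Gbit/s")      # 7.46 Gbit/s
print(f"detection list: {objects / 1e6:.3f} Mbit/s")  # 0.384 Mbit/s
print(f"reduction:      {raw // objects}x")           # 19440x
```

Sending only object lists discards raw data that cloud-side fusion might otherwise use, so the split between on-board and cloud processing remains a design trade-off.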

Previous work on the subject has concentrated on sensor fusion methods for visible light and thermal cameras [3]–[5], since such cameras are inexpensive and their data is relatively easy to annotate compared to Lidar point clouds [6]. Even though visible light cameras have very high resolutions, poor weather and illumination changes can easily distort the produced image. To tackle this, their data can be fused with data from thermal cameras. Thermal cameras capture information on the relative temperature of objects, which makes it possible to distinguish warm objects from cold ones in all weather conditions and at any time of day. However, even with state-of-the-art methods for visible light and thermal camera sensor fusion, very small objects remain difficult to detect.
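The simplest form of visible-thermal fusion works at the pixel level. The sketch below is a weighted-average baseline, not one of the deep-learning methods of [3]–[5]: it converts each RGB pixel to luminance with the ITU-R BT.601 weights and blends it with the corresponding thermal intensity. It assumes the two images are already co-registered (same size and pixel grid), which in practice requires calibration between the cameras; the weight `alpha` is an arbitrary example value.

```python
def fuse_visible_thermal(visible_rgb, thermal, alpha=0.6):
    """Weighted-average pixel-level fusion of co-registered images.

    visible_rgb: list of rows of (R, G, B) tuples with values 0-255.
    thermal:     list of rows of 0-255 intensities on the same pixel grid.
    alpha:       weight of the visible image; (1 - alpha) goes to thermal.
    Returns a list of rows of fused 0-255 grayscale values.
    """
    if len(visible_rgb) != len(thermal) or any(
        len(v_row) != len(t_row) for v_row, t_row in zip(visible_rgb, thermal)
    ):
        raise ValueError("images must be co-registered and the same size")

    fused = []
    for v_row, t_row in zip(visible_rgb, thermal):
        out_row = []
        for (r, g, b), t in zip(v_row, t_row):
            luma = 0.299 * r + 0.587 * g + 0.114 * b  # BT.601 luminance
            value = alpha * luma + (1.0 - alpha) * t
            out_row.append(max(0, min(255, round(value))))
        fused.append(out_row)
    return fused


# A bright pixel that is thermally cold, next to a dark pixel that is warm.
print(fuse_visible_thermal([[(255, 255, 255), (0, 0, 0)]], [[0, 200]]))  # [[153, 80]]
```

The warm object remains visible in the dark part of the scene, which is the motivation for fusion given above; learned fusion methods typically replace the fixed `alpha` with weights or features that adapt to the image content.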

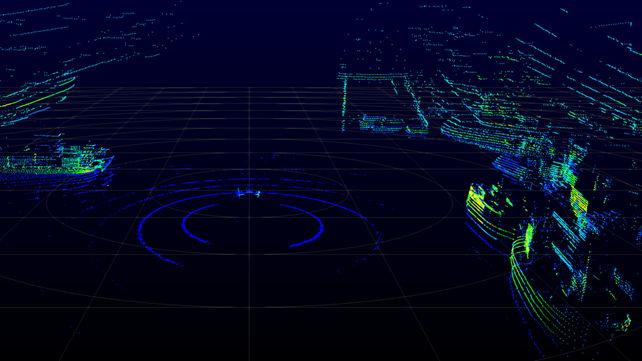

Lidars produce three-dimensional point cloud representations of their surroundings. Even though Lidar point clouds contain no information on object colour or fine surface details, the data can be used for object detection with neural networks [7]. As with camera images, the depth information from Lidars gives a better understanding of the vessel's environment when fused with data from other sensors. However, a significant issue is that point cloud representations are difficult to reconstruct in poor weather conditions. Lidar data annotation is also a complex task; semi-supervised machine learning (ML) methods for Lidar data annotation could be one way to automate it. A Lidar point cloud representation of the Aurajoki surroundings in Turku, Finland, is shown in Figure 1. The Föri ferry can be distinguished on the left side of the figure, as can some stationary vessels along the riverbank.

Figure 1. A Lidar point cloud representation of the Aurajoki surroundings in Turku, Finland.
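A point cloud such as the one in Figure 1 is built from individual Lidar returns, each of which is essentially a range measured along a known beam direction. The sketch below converts returns given as (range, azimuth, elevation) into Cartesian points. The sensor frame convention (x forward, y left, z up, azimuth counter-clockwise from x, elevation upward from the horizontal plane) is an assumption; actual Lidar drivers define their own conventions, and many already output Cartesian points.

```python
import math


def lidar_to_cartesian(returns):
    """Convert (range_m, azimuth_deg, elevation_deg) returns to (x, y, z) in metres.

    Assumed sensor frame: x forward, y left, z up. Azimuth is measured
    counter-clockwise from x in the horizontal plane, elevation upward from it.
    """
    points = []
    for rng, az_deg, el_deg in returns:
        az = math.radians(az_deg)
        el = math.radians(el_deg)
        horizontal = rng * math.cos(el)  # projection onto the x-y plane
        points.append(
            (horizontal * math.cos(az), horizontal * math.sin(az), rng * math.sin(el))
        )
    return points


for x, y, z in lidar_to_cartesian([(10.0, 0.0, 0.0), (10.0, 90.0, 0.0), (20.0, 45.0, 30.0)]):
    print(f"x={x:6.2f}  y={y:6.2f}  z={z:6.2f}")
```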

Because different sensors have different strengths and weaknesses, it is important to study them in varying weather conditions and at different times of day. Generating such conditions with AI could require less effort than obtaining real data for every possible combination of time and weather.

Maritime regulations also require observation by sound. The Convention on the International Regulations for Preventing Collisions at Sea (COLREGs) [8] specifies several types of sound signals used in different situations [9], and these signals must therefore be observed. Sound sensing could also be applied to object and event detection and localization [9]–[11]. Fusing sound sensor data with camera, Lidar, and radar data would further improve the reliability and accuracy of situational awareness in different conditions.
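Sound-based localization is commonly done by estimating the time difference of arrival (TDOA) of the same sound at two microphones. The sketch below finds the delay by brute-force cross-correlation and converts it into a far-field angle of arrival. The microphone spacing, sample rate, 343 m/s speed of sound in air, and synthetic pulse are illustrative assumptions. A real system would also need filtering, more microphones to resolve the front-back ambiguity of a two-microphone pair, and robustness to wind and engine noise.

```python
import math


def estimate_lag(a, b, max_lag):
    """Lag k (samples), |k| <= max_lag, maximising sum_n a[n] * b[n + k].

    A positive lag means the sound reached microphone B after microphone A.
    """
    best_lag, best_score = 0, float("-inf")
    for k in range(-max_lag, max_lag + 1):
        score = sum(a[n] * b[n + k] for n in range(len(a)) if 0 <= n + k < len(b))
        if score > best_score:
            best_lag, best_score = k, score
    return best_lag


def angle_of_arrival(lag, sample_rate_hz, spacing_m, speed_of_sound=343.0):
    """Far-field angle (degrees) from the array broadside; positive towards mic A."""
    ratio = speed_of_sound * (lag / sample_rate_hz) / spacing_m
    ratio = max(-1.0, min(1.0, ratio))  # guard against |ratio| > 1 from noise
    return math.degrees(math.asin(ratio))


fs, spacing = 48_000, 0.1  # assumed: 48 kHz sampling, microphones 10 cm apart
max_lag = math.ceil(spacing / 343.0 * fs)  # largest physically possible delay

pulse = [0.5, 1.0, 0.5]
mic_a = [0.0] * 300
mic_b = [0.0] * 300
mic_a[99:102] = pulse   # pulse arrives at A first ...
mic_b[104:107] = pulse  # ... and 5 samples later at B

lag = estimate_lag(mic_a, mic_b, max_lag)
print(lag, round(angle_of_arrival(lag, fs, spacing), 1))
```

Limiting the search to `max_lag` both saves computation and rejects correlation peaks that would correspond to physically impossible delays.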

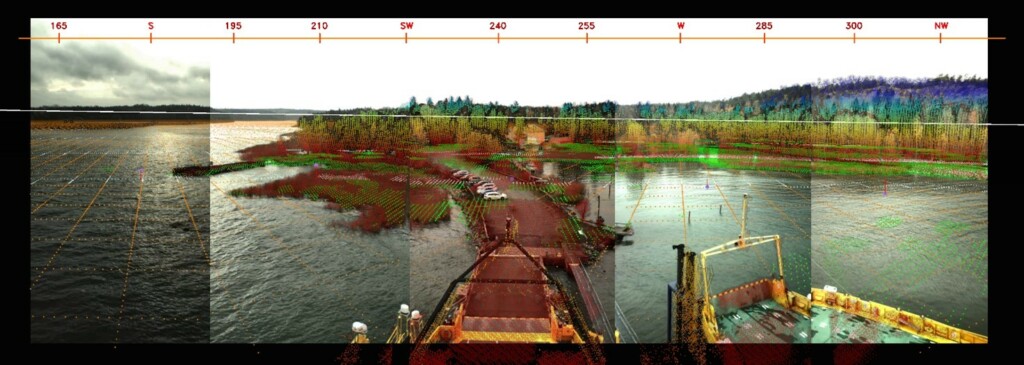

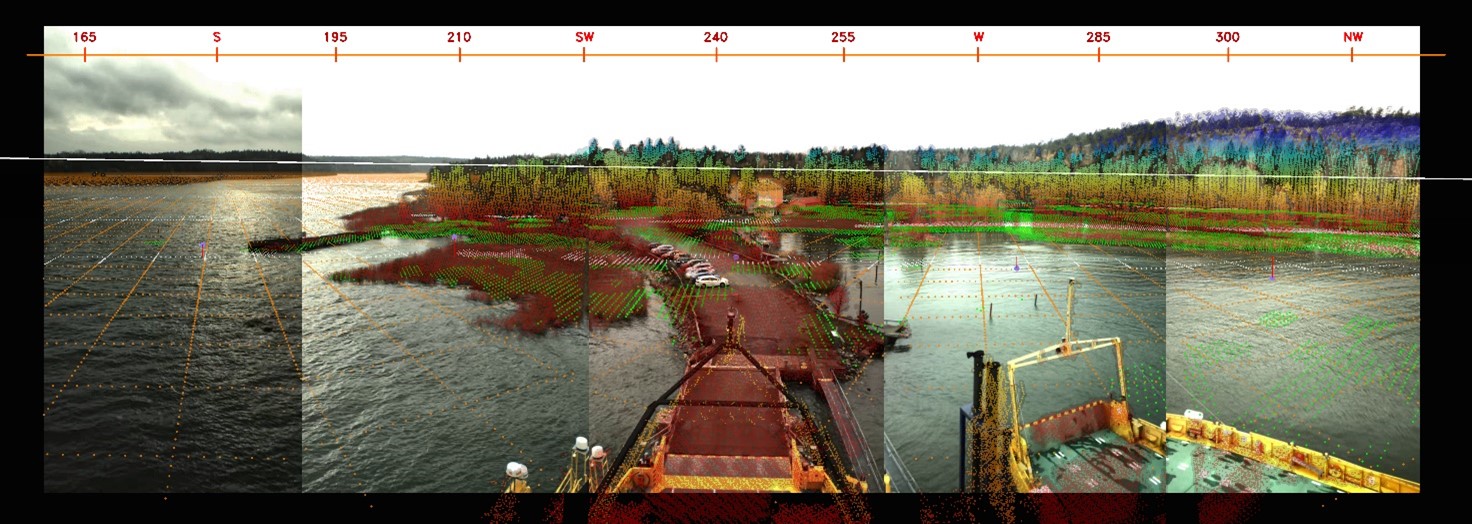

An example of sensor fusion combining GNSS, IMU, visual camera array, radar, Lidar, and map data [12] is shown in Figure 2. The visual image has been stitched together from five different cameras. The fusion has been carried out at a level where measurements from the different sensors are mapped to a common coordinate system and can be used jointly, for instance in object detection.

Figure 2. Sensor fusion combining GNSS, IMU, visual camera array, radar, Lidar, and map data. Adapted from [12].
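Mapping measurements to a common coordinate system, as in Figure 2, can be illustrated with a single radar target. The sketch below converts the own ship's GNSS position into a local east/north frame around a fixed reference point, using an equirectangular approximation that is only adequate over short distances. It then adds the target's range and bearing relative to the bow, rotated by the ship's heading (for example from the IMU or compass). The reference coordinates are hypothetical values near Turku, and antenna lever arms, pitch, roll, and timing offsets between sensors are ignored for brevity.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def geodetic_to_local(lat_deg, lon_deg, ref_lat_deg, ref_lon_deg):
    """Approximate (east, north) offset in metres from a reference point.

    Equirectangular approximation: suitable only for small areas.
    """
    d_lat = math.radians(lat_deg - ref_lat_deg)
    d_lon = math.radians(lon_deg - ref_lon_deg)
    east = EARTH_RADIUS_M * d_lon * math.cos(math.radians(ref_lat_deg))
    north = EARTH_RADIUS_M * d_lat
    return east, north


def radar_target_to_local(own_lat, own_lon, heading_deg, range_m, rel_bearing_deg, ref):
    """Place a radar target in the local frame around ref = (lat, lon).

    heading_deg:     own-ship heading, clockwise from true north.
    rel_bearing_deg: target bearing relative to the bow, clockwise.
    """
    own_east, own_north = geodetic_to_local(own_lat, own_lon, *ref)
    true_bearing = math.radians(heading_deg + rel_bearing_deg)
    return (own_east + range_m * math.sin(true_bearing),
            own_north + range_m * math.cos(true_bearing))


REF = (60.45, 22.26)  # hypothetical reference point near Turku
# Own ship 0.001 degrees north of REF, heading east, target 200 m on the port bow (-45 deg).
east, north = radar_target_to_local(60.451, 22.26, 90.0, 200.0, -45.0, REF)
print(f"target at east={east:.1f} m, north={north:.1f} m")
```

Once camera, Lidar, and radar measurements are all expressed in such a shared frame, they can be compared and combined directly, for example by the association step used for AIS data.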

One of the biggest obstacles in machine learning and sensor fusion research is the lack of domain-specific datasets [13]. In the ARPA project, we will lend a hand to the research community by collecting sensor data from the marine environment and publishing it via our data platform. The data will be published openly so that all researchers in the field can use it in their research.

Juha Kalliovaara, Senior Researcher, Turku University of Applied Sciences

References

[1] D. Nguyen, R. Vadaine, G. Hajduch, R. Garello, and R. Fablet, "Multitask learning for maritime traffic surveillance from AIS data streams," in 2018 IEEE 5th International Conference on Data Science and Advanced Analytics (DSAA), 2018, doi: 10.1109/DSAA.2018.00044.

[2] X. Zhang, C. Wang, L. Jiang, L. An, and R. Yang, "Collision-avoidance navigation systems for Maritime Autonomous Surface Ships: A state of the art survey," Ocean Engineering, vol. 235, Art. no. 109380, 2021.

[3] F. Farahnakian and J. Heikkonen, "A Comparative Study of Deep Learning-based RGB-depth Fusion Methods for Object Detection," in 2020 19th IEEE International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 2020, pp. 1475–1482, doi: 10.1109/ICMLA51294.2020.00228.

[4] F. Farahnakian, J. Poikonen, M. Laurinen, D. Makris, and J. Heikkonen, "Visible and Infrared Image Fusion Framework based on RetinaNet for Marine Environment," in 2019 22nd International Conference on Information Fusion (FUSION), Ottawa, ON, Canada, 2019, pp. 1–7.

[5] F. Farahnakian, P. Movahedi, J. Poikonen, E. Lehtonen, D. Makris, and J. Heikkonen, "Comparative Analysis of Image Fusion Methods in Marine Environment," in 2019 IEEE International Symposium on Robotic and Sensors Environments (ROSE), Ottawa, ON, Canada, 2019, pp. 1–8, doi: 10.1109/ROSE.2019.8790426.

[6] F. Farahnakian and J. Heikkonen, "Deep Learning Based Multi-Modal Fusion Architectures for Maritime Vessel Detection," Remote Sensing, vol. 12, no. 16, Art. no. 2509, 2020, doi: 10.3390/rs12162509.

[7] C. R. Qi, L. Yi, H. Su, and L. J. Guibas, "PointNet++: Deep Hierarchical Feature Learning on Point Sets in a Metric Space," in 31st Conference on Neural Information Processing Systems (NIPS), 2017.

[8] International Maritime Organization (IMO), "COLREGs – International Regulations for Preventing Collisions at Sea," Convention on the International Regulations for Preventing Collisions at Sea, 1972.

[9] J. Poikonen, "Requirements and Challenges of Multimedia Processing and Broadband Connectivity in Remote and Autonomous Vessels," in 2018 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Valencia, Spain, 2018, pp. 1–5, doi: 10.1109/BMSB.2018.8436799.

[10] N. Leal, E. Leal, and G. Sanchez, "Marine vessel recognition by acoustic signature," ARPN Journal of Engineering and Applied Sciences, vol. 10, no. 20, pp. 9633–9639, 2015.

[11] K. J. Piczak, "Environmental sound classification with convolutional neural networks," in 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), Boston, MA, USA, 2015, pp. 1–6, doi: 10.1109/MLSP.2015.7324337.

[12] J. Kalliovaara and J. Poikonen, "Maritime-Area Connectivity and Autonomous Ships," IEEE 5G Summit, Levi, Finland, Mar. 25, 2019.

[13] B. Iancu, V. Soloviev, L. Zelioli, and J. Lilius, "ABOships – An Inshore and Offshore Maritime Vessel Detection Dataset with Precise Annotations," Remote Sensing, vol. 13, no. 5, Art. no. 988, 2021, doi: 10.3390/rs13050988.