Visualization and User Interfaces

The fifth work package of the ARPA project has been implemented by the Futuristic Interactive Technologies (FIT) research group at Turku University of Applied Sciences. The work package focuses on data visualization and aims to develop innovative user interface solutions for remote operation and monitoring that improve users’ situational awareness. It is divided into four tasks, and the following is a brief summary of their outcomes as the project enters its final six months.

Situational Awareness Visualization in Virtual Reality

Given the strong maritime ICT cluster in Turku, the work package has paid special attention to enhancing situational awareness in maritime operations using virtual reality. Testing remote user interfaces on actual ships or boats has so far been challenging because of the limited availability of suitable vessels. (TUAS has since launched its new autonomous Tehoteko boat, which could provide a lot of usable sensor data for future projects.) The research group has therefore sought to improve its expertise in remote operation by building small-scale concept tests for mobile robots. The majority of the research group’s demonstrations are currently implemented in multi-user environments using the group’s own metaverse technology (TUAS Social Platform). In December 2022, the research group showcased its expertise at the ARPA project seminar in the multi-user Arpaverse environment (Figure 1). Arpaverse is a collection of key demonstrations related to remote operations for the ARPA project, built on top of the TUAS Social Platform.

Figure 1: Senior Specialist Timo Haavisto presenting the research group’s technology at the ARPA seminar in December 2022.

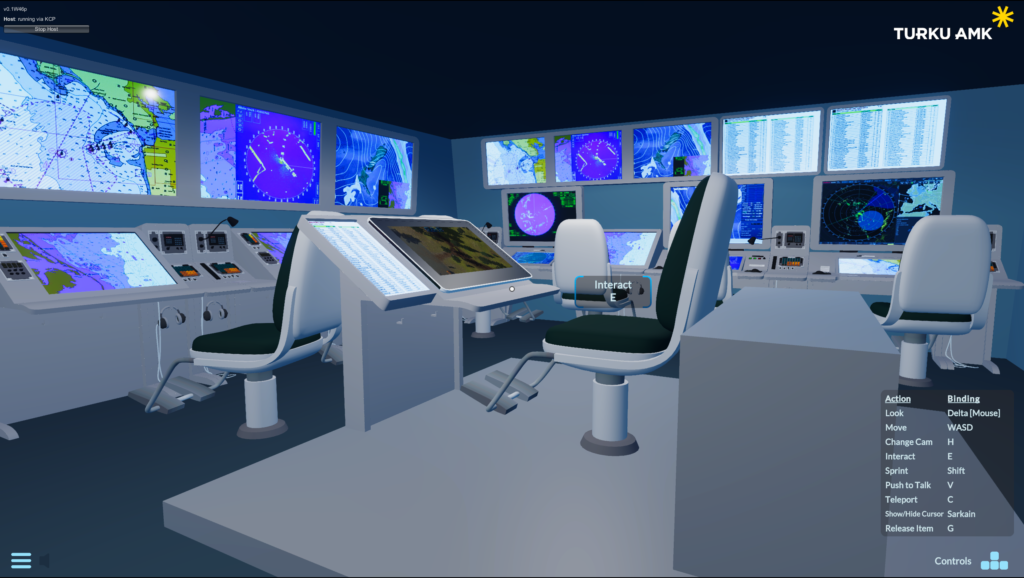

In the Arpaverse environment, various visualizations utilizing neural networks were available, including the identification of vessels from 360-degree video footage, as well as the same video footage augmented with LiDAR and AIS data. These were presented to users as video panels, which are intended to be later integrated into the remote user interface of Turku University of Applied Sciences’ Tehoteko boat within the ROC (Remote Operations Center) environment. The environment also includes a model of a battleship, featuring a prototype view of the command center with multiple monitoring screens (currently displaying static content), and on the command bridge, the ability to view simulated AIS data on a map display.

Figure 2: Prototype of the command center with static data

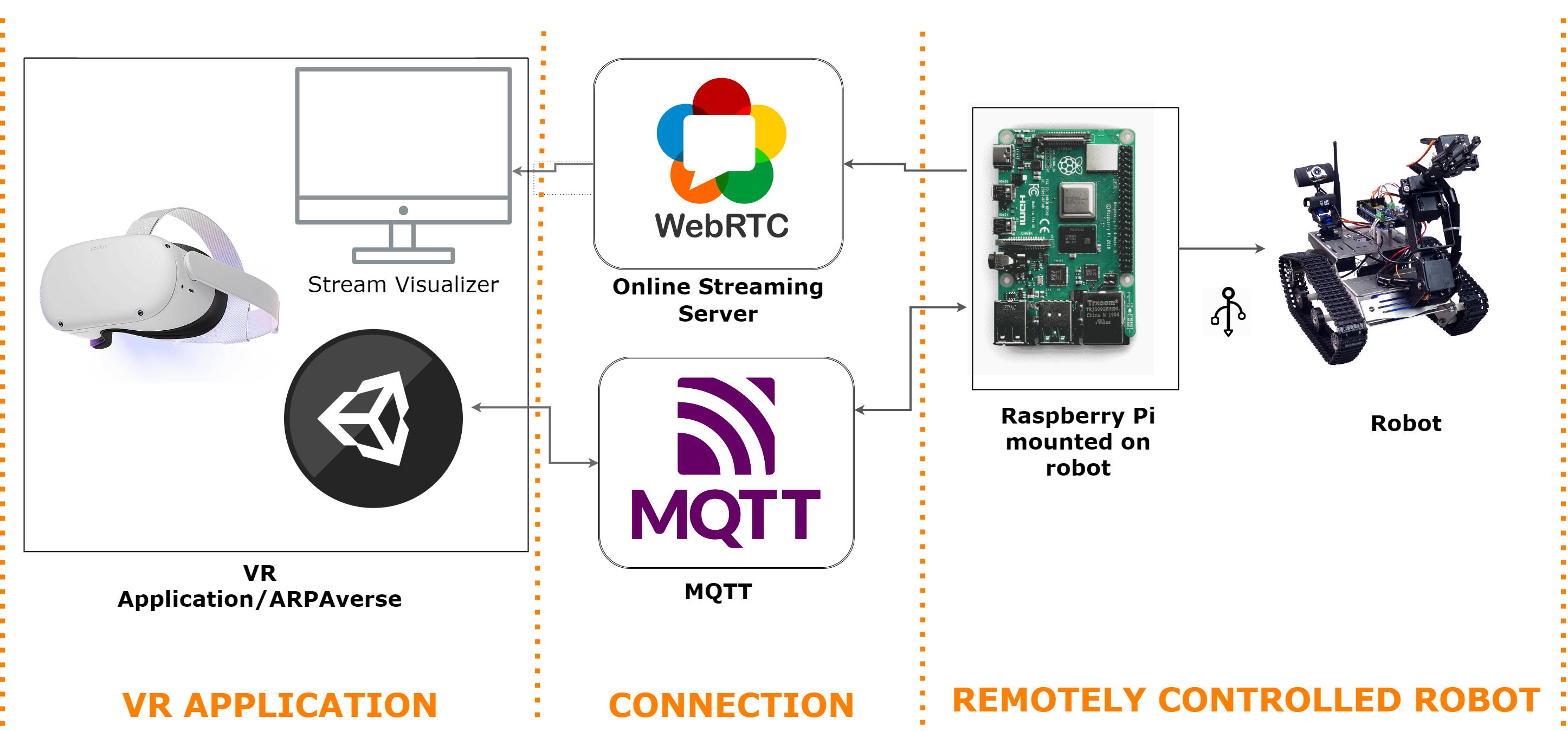

One important feature for real-time situational awareness is a 360-degree view of the operating environment. The Finnish company Nokia has been developing a technology called Real-time eXtended Reality Multimedia, or RXRM. It could potentially remove almost all latency from video streaming and also reduce the bandwidth required, making it better suited to situations where enhanced situational awareness or remote governance is needed. In the Arpaverse environment, users who climb onto the battleship’s deck can explore a 360-degree video view of Nauvo Harbor. This demonstrates how the view could be replaced with Nokia’s RXRM technology to provide a real-time view from the Tehoteko boat to the ROC environment.

The Arpaverse environment also features the research group’s competence center, which includes a laser-scanned demonstration space where a mobile robot can be operated remotely using real-time video footage and a modeled and animated digital twin of the robot. The virtual environment and the robot communicate using the MQTT protocol, which is popular in IoT and robotics applications. Live video of the robot (Figure 4) is transmitted using reliable, low-latency WebRTC technology (Figure 3), which makes it suitable for remote robot monitoring. This implementation concretely illustrates the need to consider video latency in remote user interfaces, as well as the challenges and opportunities of digital twin visualization. To fully replace the video view with a digital twin visualization, the environment and the moving device itself must provide the user with sufficient situational information from various sensor sources.
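As a rough illustration of the kind of message that could travel between the robot and its digital twin over MQTT, the following Python sketch encodes a timestamped pose as JSON and computes its age on arrival. The topic layout, the field names, and the `RobotPose` type are assumptions made for this example and are not taken from the project. Publishing the payload would require an MQTT client library, which is deliberately left out here.

```python
import json
import time
from dataclasses import dataclass, asdict


@dataclass
class RobotPose:
    """Hypothetical pose of the mobile robot in the scanned demonstration space."""
    x_m: float          # position along the x axis, metres
    y_m: float          # position along the y axis, metres
    heading_deg: float  # heading in degrees, counter-clockwise from the x axis
    sent_at: float      # sender clock, seconds since the Unix epoch


def pose_topic(robot_id: str) -> str:
    """Build a topic name. The layout is an assumption, not the project's scheme."""
    return f"arpaverse/robots/{robot_id}/pose"


def encode_pose(pose: RobotPose) -> bytes:
    """Serialise a pose as compact UTF-8 JSON, usable as an MQTT payload."""
    return json.dumps(asdict(pose), separators=(",", ":")).encode("utf-8")


def decode_pose(payload: bytes) -> RobotPose:
    """Parse a payload produced by encode_pose; raises on missing or extra fields."""
    return RobotPose(**json.loads(payload.decode("utf-8")))


def age_seconds(pose: RobotPose, now: float) -> float:
    """Age of the pose when applied to the digital twin.

    Only meaningful if the sender and receiver clocks are synchronised.
    """
    return max(0.0, now - pose.sent_at)


if __name__ == "__main__":
    pose = RobotPose(x_m=1.25, y_m=-0.4, heading_deg=90.0, sent_at=time.time())
    payload = encode_pose(pose)
    # An MQTT client would publish `payload` on pose_topic("robot1") here.
    received = decode_pose(payload)
    print(pose_topic("robot1"), received, age_seconds(received, time.time()))
```

Carrying a send timestamp in every message lets the virtual environment flag or extrapolate stale poses, which is one simple way to make latency visible to the operator when the digital twin stands in for live video.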

Figure 3: Simplified diagram of the robot

The research group has been engaged in discussions and early-stage collaboration inquiries with defense industry and defense sector researchers. In addition to the battleship, the Arpaverse environment includes demonstrations of a few tanks, and the remote operation of both has been considered as part of the project’s activities in recent months.

Figure 4: Vessel identification of nearby boats (left image) and remote operation of a mobile robot (right image) in the Arpaverse environment.

Situational Awareness Visualization using Augmented Reality

The research group has access to a wealth of interactive technology, particularly for mixed reality, where devices are developing rapidly. In particular, Varjo’s XR-3 headset offers an excellent opportunity to build solutions that integrate real-world elements into VR visualizations. Varjo has several interesting examples of XR technology utilization, such as Lockheed Martin’s fighter pilot training and Boeing’s astronaut training solutions. Typically, these solutions overlay digital content onto real-world cockpit views and are used to maintain specific professional qualifications that require regular training (e.g., during long space flights).

The research group has acclaimed expertise in maritime simulation environments through the MarISOT project (Best Finnish Educational Application in 2023). This expertise has been considered for further development towards mixed reality, incorporating certain real command bridge control elements into the system architecture and thus into virtual reality training scenarios. Such expertise has been recognized for broader application in various training and remote operation solutions.

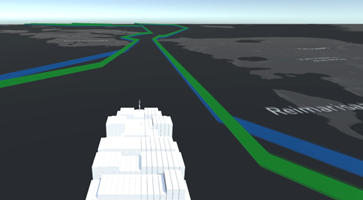

The research group has also continued its design work from previous projects with a company called Brighthouse Intelligence on implementing a VR version of the ROC environment. This ongoing collaboration builds on the recently concluded Smarter project, in which the research group used maritime navigation data from Brighthouse Intelligence to develop a mixed reality prototype of a coal ship arriving at the Port of Helsinki. In that case, the solution projected digital content, including navigational signs, waterway areas, and information about other vessels, onto the maritime view for the user.

Figure 5: Mixed reality elements projected onto the maritime view as the ship arrives at the port.

Remote Control Interface

As mentioned earlier, the research group has developed various user interface solutions for remote control of ships and mobile robots. As remote control progresses towards autonomous solutions, the design of the user interface will undergo significant changes. Monitoring environments will revolutionize traditional thinking, particularly concerning command bridges in maritime operations. One individual will be responsible for managing multiple vessels, rendering the traditional approach of providing information and control views in a command bridge style impractical.

The research group sees tremendous opportunities for various follow-up projects in this area, as they have accumulated considerable expertise in constructing user interfaces that require situational awareness and evaluating the usability, user experience, and efficiency of such interfaces. The development of monitoring environments is an extension of the ROC environment development. Virtual and mixed reality user interface solutions will play a significant role in the development of next-generation remote control and monitoring solutions.

Multimodal Interaction and Neuroscientific Testing Environments

In addition to the remote operation solutions, the research group has acquired a wealth of interactive technology, some of which is closely related to multimodal interaction. During the project, the group tested the use of smart gloves in mobile robotics in collaboration with the Polytechnic University of Valencia and its spin-off company (a collaboration also linked to the research exchange of the concluded Smarter project). After this experiment, the research group tested the Manus gloves from the field of mechanical engineering and its own Rokoko gloves, and eventually acquired the SenseGlove for further testing. The functionality of these gloves was also of interest to the Finnish Navy (Figure 6).

Figure 6: Commander Captain Pasi Leskinen of the Finnish Navy testing the functionalities of SenseGlove at the MatchXR event.

Based on the results, glove technology still requires further development. The most promising alternative currently appears to be replacing game controllers with the finger and hand tracking sensors built into XR (Extended Reality) glasses.

In the past year, generative artificial intelligence has opened up numerous possibilities for studying multimodality. The research group has integrated OpenAI technology into its metaverse environments, for example ChatGPT (text translation), Whisper (speech-to-text) and DALL-E (image and texture generation). Virtual keyboards and display screens enable AI-assisted information retrieval in these metaverse environments. When combined with advanced speech-to-text and text-to-speech technologies, as well as customizable avatar editors, AI-based mentors or tutors can be built for multi-user remote control and monitoring environments to support decision-making.

The development of XR glasses has made it possible to collect users’ eye movement data and biosensor data related to user behavior, which can be used to measure both decision-making and cognitive load. The research group has some experience with such sensors, including experiments with eye movement data from Varjo XR-3 glasses and with biosensors on the iMotions platform, and this expertise can also be applied to future user interface design. For example, certain remote control functions may be controlled by eye movement. Eye tracking can also reduce graphics load through foveated rendering, in which only the region the user is looking at is rendered at full detail, allowing more demanding environments to be presented in virtual reality.
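The idea behind foveated rendering can be sketched in a few lines of Python: regions of the view are rendered at a lower resolution the further they lie from the gaze direction reported by the eye tracker. The thresholds, scale factors, and function names below are illustrative assumptions only. They do not come from Varjo or any other headset SDK, and real implementations run on the GPU.

```python
import math

# Illustrative thresholds in degrees of visual angle (assumed values).
FOVEA_DEG = 5.0
MID_DEG = 20.0


def angle_between_deg(a, b):
    """Angle in degrees between two 3D direction vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("direction vectors must be non-zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))


def shading_scale(gaze_dir, tile_dir):
    """Resolution scale for a screen region: 1.0 is full detail, smaller is coarser."""
    eccentricity = angle_between_deg(gaze_dir, tile_dir)
    if eccentricity <= FOVEA_DEG:
        return 1.0   # region under the user's gaze
    if eccentricity <= MID_DEG:
        return 0.5   # near periphery
    return 0.25      # far periphery
```

For example, with the gaze pointing straight ahead along `(0, 0, 1)`, a region in the same direction gets a scale of 1.0, while a region 90 degrees to the side, such as `(1, 0, 0)`, gets 0.25.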

Authors:

Mika Luimula, Executive Principal Lecturer, Turku University of Applied Sciences

Niko Laivuori, Project Engineer, Turku University of Applied Sciences

Víctor Blanco Bataller, Project Engineer, Turku University of Applied Sciences