An Extended Reality Test Platform for Ship Bridges

Background

Extended reality (XR) is an umbrella term for the family of mixed reality (MR), augmented reality (AR), and virtual reality (VR) technologies. The public mainly associates XR with mobile games such as Niantic's Pokémon GO [1] and with AR filters on social media [2]. However, there is an emerging industrial market for XR technologies. In recent years, XR applications have emerged in, for example, the automotive and aerospace industries [6, 7, 9], architecture and design [8, 9, 11], manufacturing [11, 12], and education [10]. In 2020, a group of Norwegian researchers published a review of AR applications for ship bridges [3]. In their article, AR was seen as a way to superimpose digital information on the physical world, either through physical displays mounted on the bridge, visualizations reflected in the bridge windows, or an XR headset. A key takeaway from their research was that there are numerous ways to display information in XR environments, but no consensus on which layouts and designs to use for ship-bridge applications. The study also concluded that further research on user-centered design of AR systems is needed. The extended reality test platform developed in the ARPA project aims to address these open questions.

Extended Reality Test Platform

The goal of the XR test platform is to adapt the existing ship-bridge simulators and the remote operation center at the Aboa Mare campus [5], which are mainly used for education and training, for early-stage testing of software and XR products. With this initiative, we hope to provide a platform that helps researchers and developers move their software and XR products from prototypes to actual sea trials more quickly and easily.

The system architecture

The ship-bridge simulators at Aboa Mare are built from consoles equipped with the same instrumentation that you would find on real ships [5]. The only difference from a real ship is that the environment and the ship's motion are simulated, which makes the simulator bridge as close to a real ship bridge as you can get on land. The XR environment is set up on the simulator bridge in the same way as it would be on a real ship: no modifications have been made to the simulation software to provide the XR visualizations. In practice, only signals that can be accessed on a real ship bridge are used to augment reality. Navigational data are captured as NMEA messages [13] from virtual equipment such as GPS receivers, autopilots, and gyrocompasses. Information on surrounding ships is limited to the data available from the automatic identification system (AIS) [13]. Video streams can be captured either by cameras placed in the bridge room or by virtual cameras placed on the simulated ship.
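NMEA messages are plain-text sentences, so consuming them is mostly a matter of framing and checksum validation. The Python sketch below shows how a module on the platform might validate and parse them. It assumes NMEA 0183 framing (a `$` or `!` start character, comma-separated fields, and a two-digit hexadecimal XOR checksum after `*`) and is not the platform's actual parser; all function names are hypothetical, and the GGA sentence in the usage lines is a widely used textbook example rather than simulator output.

```python
from functools import reduce
from typing import List, Tuple


def nmea_checksum(body: str) -> str:
    """XOR of every character between the start character and '*', as two hex digits."""
    return f"{reduce(lambda acc, ch: acc ^ ord(ch), body, 0):02X}"


def parse_nmea(sentence: str) -> Tuple[str, List[str]]:
    """Validate one NMEA 0183 sentence and return (address field, data fields)."""
    sentence = sentence.strip()  # drop a trailing CR/LF if present
    if sentence[:1] not in ("$", "!") or "*" not in sentence:
        raise ValueError(f"not an NMEA sentence: {sentence!r}")
    body, checksum = sentence[1:].split("*", 1)
    if nmea_checksum(body) != checksum.upper():
        raise ValueError(f"checksum mismatch in {sentence!r}")
    address, *fields = body.split(",")
    return address, fields


def ddmm_to_degrees(value: str, hemisphere: str) -> float:
    """Convert an NMEA (d)ddmm.mmm value plus N/S/E/W to signed decimal degrees."""
    degrees, minutes = divmod(float(value), 100.0)
    decimal = degrees + minutes / 60.0
    return -decimal if hemisphere in ("S", "W") else decimal


def position_from_gga(sentence: str) -> Tuple[float, float]:
    """(latitude, longitude) in decimal degrees from a GGA fix sentence."""
    address, fields = parse_nmea(sentence)
    if not address.endswith("GGA"):
        raise ValueError(f"expected GGA, got {address}")
    return ddmm_to_degrees(fields[1], fields[2]), ddmm_to_degrees(fields[3], fields[4])


def heading_from_hdt(sentence: str) -> float:
    """True heading in degrees from an HDT sentence (e.g. from a gyrocompass)."""
    address, fields = parse_nmea(sentence)
    if not address.endswith("HDT"):
        raise ValueError(f"expected HDT, got {address}")
    return float(fields[0])


if __name__ == "__main__":
    gga = "$GPGGA,123519,4807.038,N,01131.000,E,1,08,0.9,545.4,M,46.9,M,,*47"
    print(position_from_gga(gga))
    hdt_body = "HEHDT,274.07,T"
    print(heading_from_hdt("$" + hdt_body + "*" + nmea_checksum(hdt_body)))
```

Rejecting sentences with a bad checksum, rather than silently using them, keeps corrupted navigational data out of any overlay built on top of it.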

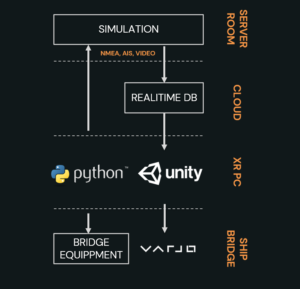

Figure 1 gives an overview of the XR platform architecture. Navigational data and video streams are stored in a real-time database, from which the Python and Unity modules access them. The Python module runs video-processing and machine learning models, whereas the Unity module produces the visualizations shown in the XR headset. Examples of models that can run in the Python module include object detection, semantic segmentation, perspective transformation, and horizon detection algorithms.

Figure 1. XR test-platform architecture overview.
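The article does not name the real-time database, so the following is only a sketch of the access pattern described for Figure 1: producers write the newest navigational value or frame reference under a key, and consumers such as the Python and Unity modules either read the latest sample or subscribe to updates. `RealtimeStore`, its methods, and key names such as `nav/heading` are hypothetical, and an in-process store stands in for whatever networked database the platform actually uses.

```python
import threading
import time
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Optional


@dataclass(frozen=True)
class Sample:
    value: Any
    timestamp: float  # seconds; time.monotonic() unless the publisher supplies one


Callback = Callable[[str, Sample], None]


class RealtimeStore:
    """Hypothetical latest-value store standing in for the platform's real-time database."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._latest: Dict[str, Sample] = {}
        self._subscribers: Dict[str, List[Callback]] = {}

    def publish(self, key: str, value: Any, timestamp: Optional[float] = None) -> None:
        """Overwrite the newest sample for `key` and notify its subscribers."""
        sample = Sample(value, time.monotonic() if timestamp is None else timestamp)
        with self._lock:
            self._latest[key] = sample
            callbacks = list(self._subscribers.get(key, []))
        # Callbacks run outside the lock so a slow consumer cannot block publishers.
        for callback in callbacks:
            callback(key, sample)

    def subscribe(self, key: str, callback: Callback) -> None:
        """Register a callback that is invoked on every publish to `key`."""
        with self._lock:
            self._subscribers.setdefault(key, []).append(callback)

    def latest(self, key: str, max_age: Optional[float] = None,
               now: Optional[float] = None) -> Optional[Sample]:
        """Newest sample for `key`, or None if missing or older than `max_age` seconds."""
        with self._lock:
            sample = self._latest.get(key)
        if sample is None:
            return None
        now = time.monotonic() if now is None else now
        if max_age is not None and now - sample.timestamp > max_age:
            return None
        return sample


if __name__ == "__main__":
    store = RealtimeStore()
    # A processing module could react to new frames; here it just prints the frame id.
    store.subscribe("video/frame_id", lambda key, s: print("new frame", s.value))
    store.publish("video/frame_id", 1)
    store.publish("nav/heading", 274.07)
    print(store.latest("nav/heading", max_age=1.0))
```

The `max_age` check is one way a renderer could hide an overlay instead of drawing it from outdated heading or position data.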

Design and layout testing

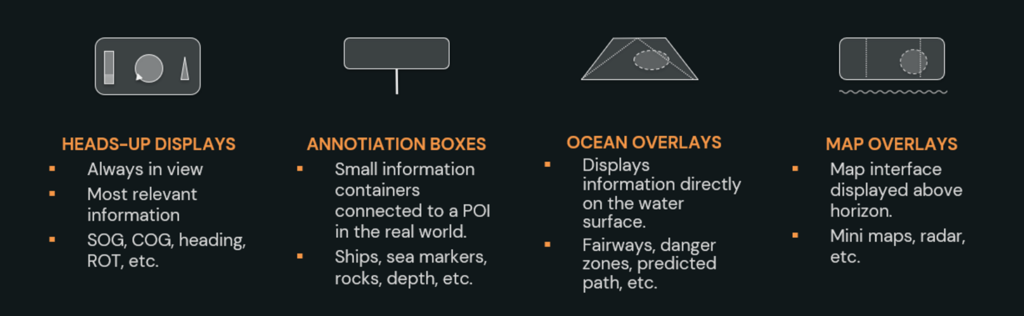

There is a general lack of design principles, guidelines, and requirements for extended reality applications on ship bridges [3, 14]. Some of the most common information displays proposed by researchers and developers are summarized in Figure 2, and a heads-up display implemented on a simulator bridge is shown in Figure 3. With the XR test platform, we hope to establish best practices for layout design on ship bridges and to reach a consensus on which XR features are helpful for navigation.

Developing systematic procedures for layout testing and design is the first step towards a consensus on layout designs for ship bridges. The OpenBridge design project [14, 15] has already made significant progress towards this goal, and the design practices proposed by the OpenBridge design system will be implemented and extended on the XR test platform. By drawing on feedback from students, captains, and sea captains operating the ship-bridge simulators, we hope to reach a consensus on how designs, features, and layouts should be implemented in ship-bridge XR applications.

Figure 2. Summary of various information displays used in ship-bridge XR applications [3, 4].

Figure 3. An XR heads-up display in use on a simulator bridge. Screen capture taken from a Varjo XR-3 headset.
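When several layouts are compared using feedback from simulator users, the order in which each participant sees them matters: if everyone sees them in the same order, learning and fatigue effects get mixed up with the layouts themselves. One conventional remedy, sketched here as an assumption rather than as the project's procedure, is to counterbalance presentation order with a balanced Latin square. The layout names are hypothetical placeholders.

```python
from typing import List, Sequence


def balanced_latin_square(n: int) -> List[List[int]]:
    """Presentation orders for n conditions, balanced for first-order carry-over.

    Every condition appears once in each position. For even n, each condition
    immediately follows every other condition exactly once across the n rows;
    for odd n the mirrored rows are appended (2n orders) so that every ordered
    pair of neighbours occurs equally often.
    """
    def offset(j: int) -> int:
        # Zig-zag column pattern 0, 1, n-1, 2, n-2, ...
        return j // 2 + 1 if j % 2 else (n - j // 2) % n

    rows = [[(offset(j) + i) % n for j in range(n)] for i in range(n)]
    if n % 2:
        rows += [row[::-1] for row in rows]
    return rows


def order_for_participant(participant: int, layouts: Sequence[str]) -> List[str]:
    """Layout order for the participant-th session (0-based), cycling through the square."""
    rows = balanced_latin_square(len(layouts))
    return [layouts[k] for k in rows[participant % len(rows)]]


if __name__ == "__main__":
    # Hypothetical layout variants; not taken from the project.
    layouts = ["hud_top", "hud_bottom", "world_anchored", "no_overlay"]
    for p in range(4):
        print(p, order_for_participant(p, layouts))
```

Cycling participants through the rows keeps the design balanced as long as the number of participants is a multiple of the number of rows.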

Testing and development of machine learning models

The high degree of similarity between the ship-bridge simulators and real ships also lets us test and develop solutions to many real-life implementation challenges before any equipment is installed on a real ship. For example, enhanced situational awareness systems may rely on machine learning to detect objects in the vicinity of the ship or to parse radio communication. The ship-bridge simulator supports testing and development both of such modules individually and of complete situational awareness systems. The latter becomes possible when the output of the machine learning models is combined with other data available on a ship bridge, such as AIS and radar data. As a concrete example, one could combine object detection, speech recognition, and AIS data to highlight which of several surrounding ships you are talking to, or to overlay additional information about ships, objects, or landmarks visible from the bridge.
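To illustrate combining AIS data with object detection, the following sketch associates AIS targets with detector bounding boxes by bearing. It assumes a forward-facing camera aligned with the ship's heading, a simple pinhole model with a known horizontal field of view, and detections described only by their horizontal pixel extents; all names and default values (`hfov_deg=60`, `gate_px=40`) are hypothetical. Camera mounting offsets, roll and pitch, and range are deliberately left out.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class AisTarget:
    mmsi: int
    lat: float  # decimal degrees
    lon: float


@dataclass
class Detection:
    x_min: float  # bounding-box horizontal extent in pixels
    x_max: float
    label: str


def initial_bearing(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle initial bearing from point 1 to point 2, degrees in [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    y = math.sin(dlon) * math.cos(phi2)
    x = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360.0


def relative_bearing(bearing: float, heading: float) -> float:
    """Signed angle from the bow in [-180, 180); negative values are to port."""
    return (bearing - heading + 180.0) % 360.0 - 180.0


def project_to_image_x(rel_deg: float, image_width: int, hfov_deg: float) -> Optional[float]:
    """Pinhole projection of a relative bearing to a pixel column, None if outside the view."""
    if abs(rel_deg) >= hfov_deg / 2:
        return None
    focal_px = (image_width / 2) / math.tan(math.radians(hfov_deg) / 2)
    return image_width / 2 + focal_px * math.tan(math.radians(rel_deg))


def associate(own_lat: float, own_lon: float, heading: float,
              targets: List[AisTarget], detections: List[Detection],
              image_width: int = 1920, hfov_deg: float = 60.0,
              gate_px: float = 40.0) -> Dict[int, Detection]:
    """Greedily pair each AIS target with the nearest unused detection within gate_px."""
    pairs: Dict[int, Detection] = {}
    used = set()
    for target in targets:
        bearing = initial_bearing(own_lat, own_lon, target.lat, target.lon)
        x = project_to_image_x(relative_bearing(bearing, heading), image_width, hfov_deg)
        if x is None:
            continue  # target is outside the camera's field of view
        best, best_dist = None, gate_px
        for i, det in enumerate(detections):
            if i in used:
                continue
            dist = abs((det.x_min + det.x_max) / 2 - x)
            if dist <= best_dist:
                best, best_dist = i, dist
        if best is not None:
            used.add(best)
            pairs[target.mmsi] = detections[best]
    return pairs
```

Greedy nearest-neighbour matching keeps the sketch short; with many closely spaced ships, an optimal assignment method such as the Hungarian algorithm would be more robust.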

Mikael Manngård, Project Manager, Novia University of Applied Sciences

Johan Westö, Project Manager, Novia University of Applied Sciences

References

[1] Paavilainen, J., Korhonen, H., Alha, K., Stenros, J., Koskinen, E., & Mäyrä, F. (2017, May). The Pokémon GO experience: A location-based augmented reality mobile game goes mainstream. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (pp. 2493-2498).

[2] Javornik, A., Marder, B., Barhorst, J. B., McLean, G., Rogers, Y., Marshall, P., & Warlop, L. (2022). ‘What lies behind the filter?’ Uncovering the motivations for using augmented reality (AR) face filters on social media and their effect on well-being. Computers in Human Behavior, 128, 107126.

[3] Gernez, E., Nordby, K., Eikenes, J. O., & Hareide, O. S. (2020). A review of augmented reality applications for ship bridges.

[4] Nordby, K. (2019). Safe maritime operations under extreme conditions. URL: https://medium.com/ocean-industries-concept-lab/sedna-safe-maritime-operationsunder-extreme-conditions-the-arctic-case-48cb82f85157

[5] Aboa Mare (2022). Maritime Simulators. URL: https://www.aboamare.fi/Maritime-Simulators

[6] Frigo, M. A., da Silva, E. C., & Barbosa, G. F. (2016). Augmented reality in aerospace manufacturing: A review. Journal of Industrial and Intelligent Information, 4(2).

[7] Regenbrecht, H., Baratoff, G., & Wilke, W. (2005). Augmented reality projects in the automotive and aerospace industries. IEEE Computer Graphics and Applications, 25(6), 48-56.

[8] Wang, X. (2009). Augmented reality in architecture and design: potentials and challenges for application. International Journal of Architectural Computing, 7(2), 309-326.

[9] Varjo (2022). Case Volvo Cars: Speeding up automotive design with Varjo’s mixed reality. URL: https://varjo.com/testimonial/volvo-cars-on-varjo-mixed-reality-this-is-the-future-of-creativity/

[10] Yuen, S. C. Y., Yaoyuneyong, G., & Johnson, E. (2011). Augmented reality: An overview and five directions for AR in education. Journal of Educational Technology Development and Exchange (JETDE), 4(1), 11.

[11] Nee, A. Y., Ong, S. K., Chryssolouris, G., & Mourtzis, D. (2012). Augmented reality applications in design and manufacturing. CIRP Annals, 61(2), 657-679.

[12] Fast-Berglund, Å., Gong, L., & Li, D. (2018). Testing and validating Extended Reality (XR) technologies in manufacturing. Procedia Manufacturing, 25, 31-38.

[13] European Standard (2016). Maritime navigation and radiocommunication equipment and systems – Digital interfaces – Part 1: Single talker and multiple listeners (IEC 61162-1:2016).

[14] Nordby, K., Gernez, E., & Mallam, S. (2019). OpenBridge: designing for consistency across user interfaces in multi-vendor ship bridges. Proceedings of Ergoship 2019, 60-68.

[15] OpenBridge (2022). Open Bridge Design System. URL: https://www.openbridge.no/